By William Dance, Alex Christiansen and Alexander Wild

Within corpus linguistics, multimodality is a subject which is often overlooked.

While there are multiple projects tackling multimodal interactional elements in corpora, such as the French interaction corpus RECOLA and the video meeting repository REPERE, corpus linguistic approaches generally tend to struggle when faced with extra-textual content such as images. Until now, the only viable approach to including such content in a corpus has been manual image annotation, but such an approach runs into two overarching issues: first, visual modality is the most labour-intensive form of multimodal corpus annotation when performed ‘by hand’ and second, multimodal corpora are often limited in scope and therefore remain very specialist using relatively small datasets.

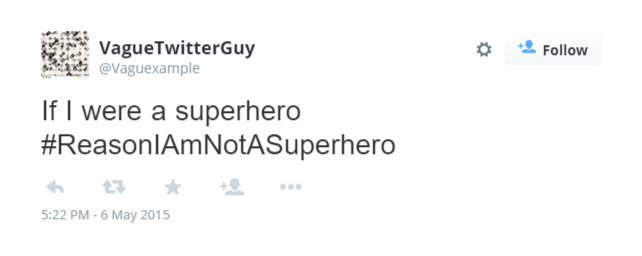

However, as Twitter and other social media are quickly becoming popular sources of natural text, it is important to recognise that ignoring images means ignoring a large portion of potential meaning. In the worst instances, texts become entirely meaningless without the context supplied by the image – take for example this relatively innocuous tweet about superheroes:

without its image content.

As opposed to with it.

The image in the example above comprises part of the meaning making process and without the image, meaning is lost. Although evidence of the number of posts which include images are scarce, an engagement analysis sampling 1,000,000 posts from Twitter tentatively noted that 42% included an image.

As a step towards fixing this omission, we are introducing a new methodological tool to the corpus linguistic toolbox, tentatively named Visual Constituent Analysis or simply VCA. As the name implies, the approach draws from the concept of grammatical constituencies, presenting images as a series of individual semiotic constituents, which can then be shown in-line with any co-text found in the tweet. Using Google’s Cloud Vision API, VCA seeks to redress the issues raised earlier of scalability and scope by automating the annotation process and consequently widening the research scope, allowing studies to be extended to a much larger portion of multimodal data with very little extra work involved.

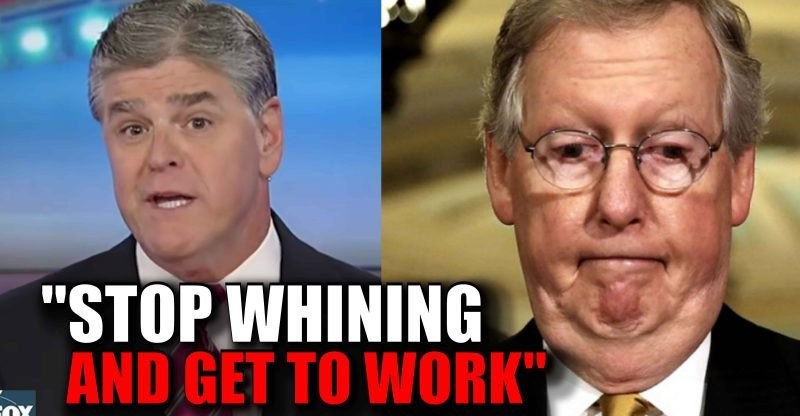

In addition to extending the scale of analysis, Vision also supplies information that would otherwise be missed by most annotators. This includes the function called web entities, which retrieves the set of all indexed web-pages using a particular image and extracts the most representative keywords from the context the image was used in. As an example, note that in the sample image below Vision detects only that the image contains a ‘journalist’/’commentator’ and that there is a ‘photo caption’, while web entities highlight that the people in question are Sean Hannity and Mitch McConnel, as well as the fact that the image relates to Fox News and the Speaker of the United States’ House of Congress.

Input

Output

| Labels | Journalist; Commentator; Facial Expression; Person; Forehead; Photo Caption; Chin; Official |

| Web Entities | Sean Hannity; Mitch McConnell; FOX News; Kentucky; United States Senate; Republican Party; Capigruppo al Senato degli Stati Uniti d’America; Speaker of the United States House; United States Congress; Election; President of the United States |

| Document | “STOP WHINING AND GET TO WORK” |

While we recognise that there are obvious issues with allowing an algorithm to take over the task of annotating images, we posit that the same issues are inherent to human annotation, perhaps to an even larger degree. Within the traditional annotation method, a human element is required to process the non-textual data by hand, with implications of scale, consistency and knowledge-base. Vision offers vast scalability as well as the web indexing power of Google and consequently can help to analyse large multimodal datasets that would require teams of human annotators to process.

To test the viability of the approach as well as the reliability of the data-labelling supplied by Google’s neural network, we will use VCA to analyse the use of images in hostile-state information operations on social media in Twitter’s recently released Internet Research Agency dataset (T-IRA). T-IRA includes all the users identified by Twitter as being connected to Russian state-backed information operations and measures more than 9 million tweets, including a database of more than 1.4 million images.

This project will test the viability of VCA as a method of corpus construction but will also provide insights into how information operations weaponise images on social media. Using VCA, we will seek to identify the strategies used in T-IRA to try and influence people’s political and social views. Looking at studies of online disinformation as well as linguistic studies of manipulation these strategies will be codified into a typology of online image-based manipulation.